Comprehensive List of Researchers "Information Knowledge"

Department of Media Science

- Name: TODA, Tomoki

- Group: Architecture for Information Media Space Group

- Title: Professor

- Degree: Doctor of Engineering

- Research Field: Speech processing / Sound signal processing / Speech communication enhancement

Current Research

Speech and sound information processing techniques to make the impossible possible

Sound is a vibration propagating through a medium such as air. Although it is represented simply as a one-dimensional time-varying signal, it can convey diverse information. For instance, a speech signal, a typical sound signal produced by humans, can simultaneously convey linguistic information (e.g., what is said), expression (e.g., how it is said), speaker identity (e.g., who is speaking), and additional information acquired through propagation, such as location and acoustic environment (e.g., where the speaker is). Thanks to this versatility, speech is one of the most important communication media in our lives. Music, another sound signal that we intentionally generate, also plays an important role in enriching our lives.

My research interests include the development of the following information technologies for handling these sound signals.

- 1) Speech and language information processing

Based on fundamental technologies such as signal processing, machine learning, and reinforcement learning, I study various technologies for speech and language information processing: speech analysis, which parameterizes the speech signal to extract useful information; voice conversion, which converts specific information by modifying a given speech signal; speech synthesis, which generates a speech signal conveying given information; speaker identification, which identifies the speaker of a given speech signal; speech recognition, which converts the speech signal into text; and spoken dialog, which involves semantic decoding, dialog management, and natural language generation. Various applications of these technologies are also studied, with the aim of developing a mathematical model of our speech communication process.

- 2) Music and acoustic information processing

Various technologies for music information processing are also studied: singing voice analysis, which quantifies singing voice characteristics; singing voice conversion, which enables us to sing a song with any specific singer's voice timbre; generation of singing voice expressions beyond our physical constraints, which supports creative music activities; and music signal separation, which extracts individual sound source signals. Multi-channel signal processing is also studied to handle the acoustic information embedded in sound signals.

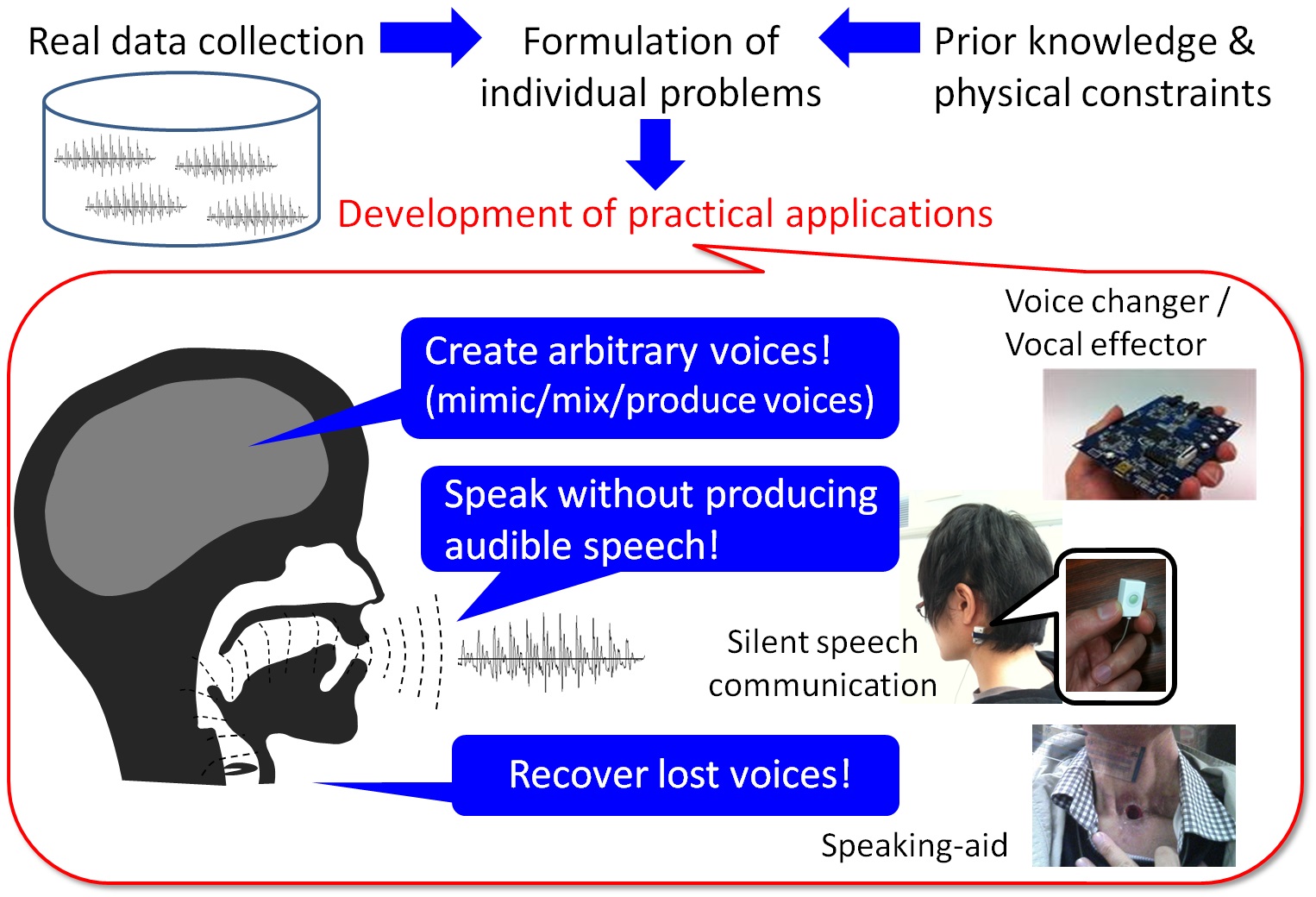

These speech and sound information technologies have great potential to improve our lives by making the impossible possible and breaking down existing barriers. As a typical example, I introduce my research on augmented speech production for enhancing our speech communication (as illustrated in the figure above). This technology is based on a statistical approach to voice conversion: each individual problem is formulated as a statistical model that effectively incorporates prior knowledge and physical constraints on speech production, and the model is then applied to real data. Some of the resulting applications are described below.

- 1) Alaryngeal speech enhancement for speaking aids

People who have undergone total laryngectomy, called laryngectomees, cannot speak in the usual manner because their vocal folds have been removed. They must use alternative speaking methods, such as esophageal speech or an electrolarynx, to produce speech sounds. Although these methods are very helpful, the resulting speech (called alaryngeal speech) sounds unnatural and is less intelligible than natural voices, which degrades their quality of life. To address this issue, we have developed alaryngeal speech enhancement methods that convert alaryngeal speech into natural-sounding voices using the real-time statistical voice conversion technique. In cooperation with the voice banking project, we have also developed voice quality control methods that restore a speaker's lost voice quality and thereby recover their speaker identity.

- 2) Body-conducted speech enhancement for silent speech communication

The nonaudible murmur microphone, a type of body-conductive microphone, has been developed to detect very soft whispered voices. It allows us to speak so softly that people nearby can barely hear us. However, the detected speech signal sounds unnatural and is less intelligible because of the body-conductive recording mechanism. To make such speech usable in communication, we have developed body-conducted speech enhancement methods based on the real-time statistical voice conversion technique, which convert body-conducted speech into air-conducted speech. These methods make speech communication possible anytime and anywhere, while protecting privacy and avoiding disturbing others.

- 3) Voice changer / vocal effector for promoting creative voice/music activities

The real-time statistical voice conversion technique enables us to produce a wide variety of voice qualities beyond our own physical constraints, e.g., to speak with a specific character's voice quality or to sing a song with a specific singer's voice timbre. By further designing a small number of manually controllable parameters that quantify voice quality, we have developed voice quality control techniques for manipulating converted voices as desired. These techniques are expected to promote creative voice and music activities.
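The statistical voice conversion behind these applications can be illustrated in its simplest textbook form: a Gaussian mixture model (GMM) over joint source/target features, where each source frame is mapped to the conditional mean of the target feature. The sketch below is a hedged illustration, not the author's implementation. It assumes scalar (1-D) features, hand-set GMM parameters instead of parameters trained on parallel data, and frame-by-frame minimum mean-square error mapping rather than the maximum likelihood trajectory estimation named in the publication list. The names `JointGaussian`, `convert_frame`, and `convert` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class JointGaussian:
    """One component of a joint-density GMM over (x, y).

    Hypothetical sketch: x is a scalar source-speaker feature and
    y is the corresponding scalar target-speaker feature.
    """
    weight: float  # mixture weight (must be > 0)
    mu_x: float    # source mean
    mu_y: float    # target mean
    var_xx: float  # source variance (must be > 0)
    cov_yx: float  # cross-covariance between target and source


def convert_frame(x: float, gmm: List[JointGaussian]) -> float:
    """Map one source frame to E[y | x], the MMSE estimate of the target."""
    # Log of weight * N(x; mu_x, var_xx) for each component,
    # computed in the log domain to avoid underflow for distant x.
    log_lik = [
        math.log(c.weight)
        - 0.5 * (x - c.mu_x) ** 2 / c.var_xx
        - 0.5 * math.log(2.0 * math.pi * c.var_xx)
        for c in gmm
    ]
    peak = max(log_lik)
    scaled = [math.exp(l - peak) for l in log_lik]
    total = sum(scaled)
    posteriors = [s / total for s in scaled]  # P(component | x)

    # Posterior-weighted sum of per-component linear regressions x -> y.
    return sum(
        p * (c.mu_y + c.cov_yx / c.var_xx * (x - c.mu_x))
        for p, c in zip(posteriors, gmm)
    )


def convert(frames: List[float], gmm: List[JointGaussian]) -> List[float]:
    """Convert a feature sequence frame by frame (no trajectory smoothing)."""
    return [convert_frame(x, gmm) for x in frames]


if __name__ == "__main__":
    # Two hand-set components standing in for a trained model.
    model = [
        JointGaussian(weight=0.5, mu_x=-1.0, mu_y=-2.0, var_xx=1.0, cov_yx=0.0),
        JointGaussian(weight=0.5, mu_x=1.0, mu_y=2.0, var_xx=1.0, cov_yx=0.0),
    ]
    print(convert([-3.0, 0.0, 3.0], model))
```

With a single component, the mapping reduces to a linear regression from the source to the target feature; with several components, the posteriors softly select a local regression for each region of the source feature space. In practice, the GMM parameters would be estimated from time-aligned parallel source/target data, and the features would be multi-dimensional spectral parameters rather than scalars.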

These augmented speech production techniques have great potential to bring us a new style of speech communication, making it more convenient and useful. On the other hand, we must also consider the possibility that these techniques could be abused. Therefore, we need to make these technologies visible and inform people about how to use them appropriately.

Career


Tomoki Toda received his B.E. degree from Nagoya University in 1999 and his M.E. and D.E. degrees from Nara Institute of Science and Technology (NAIST) in 2001 and 2003, respectively.

He was a JSPS Research Fellow from 2003 to 2005. He was an Assistant Professor (2005-2011) and an Associate Professor (2011-2015) at NAIST. He was also a Visiting Researcher at Carnegie Mellon University (CMU) from October 2003 to September 2004 and at the University of Cambridge from March to August 2008.

Since 2015, he has been a Professor at the Information Technology Center, Nagoya University.

Academic Societies

- IEEE

- ISCA

- IEICE

- IPSJ

- ASJ

Publications

- Voice conversion based on maximum likelihood estimation of spectral parameter trajectory, IEEE Transactions on Audio, Speech and Language Processing, Vol. 15, No. 8, pp. 2222-2235 (2007).

- Statistical mapping between articulatory movements and acoustic spectrum using a Gaussian mixture model, Speech Communication, Vol. 50, No. 3, pp. 215-227 (2008).

- Speech synthesis based on hidden Markov models, Proceedings of the IEEE, Vol. 101, No. 5, pp. 1234-1252 (2013).